Boto3 Download File To Memory

As I mentioned, Boto3 has a very simple API, especially for Amazon S3. Do a quick check on the stream so that we know we got what we wanted.
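
One way to do that quick check, sketched here as an assumption rather than the author's exact code, is to compare the number of bytes read with the `ContentLength` that `get_object` reports. `s3_client` is assumed to be `boto3.client("s3")`, and the helper name `read_object_checked` is hypothetical.

```python
def read_object_checked(s3_client, bucket: str, key: str) -> bytes:
    """Read an S3 object fully into memory and verify its size.

    `s3_client` is assumed to be boto3.client("s3"); any object with a
    compatible get_object() method works, which is handy for testing.
    """
    response = s3_client.get_object(Bucket=bucket, Key=key)
    data = response["Body"].read()  # the whole object now lives in RAM
    expected = response.get("ContentLength")
    # Quick check on the stream: a short read means we did not get everything.
    if expected is not None and len(data) != expected:
        raise OSError(f"expected {expected} bytes, got {len(data)}")
    return data
```

Raising on a mismatch keeps a truncated download from being processed silently.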

Boto3 S3 upload, download and list files (Python 3): the first thing we need to do is create a bucket. In the S3 console, click Create bucket and fill in the details.
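
Once the bucket exists, listing its files from Python could look like the following minimal sketch. It assumes `s3_client` is `boto3.client("s3")`; the helper name `list_keys` is hypothetical. A paginator is used because a single `list_objects_v2` call returns at most 1,000 keys.

```python
def list_keys(s3_client, bucket: str, prefix: str = "") -> list:
    """Return every object key under `prefix`, following pagination.

    `s3_client` is assumed to be boto3.client("s3").
    """
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        # A page for an empty listing has no "Contents" entry at all.
        for obj in page.get("Contents", []):
            keys.append(obj["Key"])
    return keys
```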

Boto3 can download a file to memory, and in the other direction you can pass a data stream to Boto3 to upload it to S3. First, I can reproduce the same data as you if I make only a single API call. The profiled code starts with `import boto3`, `client = boto3.client('s3')` and a function `foo()` decorated with `@profile`.
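
Passing an in-memory stream to Boto3 for upload might look like this sketch, assuming `s3_client` is `boto3.client("s3")`; `upload_bytes` is a hypothetical helper name. `upload_fileobj` accepts any readable binary file-like object, so wrapping the bytes in `io.BytesIO` is enough.

```python
import io


def upload_bytes(s3_client, data: bytes, bucket: str, key: str) -> None:
    """Upload in-memory bytes to S3 without writing a temporary file.

    `s3_client` is assumed to be boto3.client("s3").
    """
    stream = io.BytesIO(data)  # wrap the bytes in a file-like object
    s3_client.upload_fileobj(stream, bucket, key)
```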

I am trying to write an AWS Lambda function that gzips all content uploaded to S3, and some of the files are large enough to throw out-of-memory errors in Python. There are a few ways to approach this. The first, and easiest, is to download the entire file into RAM and work with it there.
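
The "entire file in RAM" approach can be sketched as below. This is an illustration under stated assumptions, not the author's Lambda code: `s3_client` is assumed to be `boto3.client("s3")`, and the helper name `gzip_object_in_memory` and the `dest_key` naming are hypothetical. Both the original and the compressed copy sit in memory at once, which is why the Lambda function needs enough memory for both.

```python
import gzip


def gzip_object_in_memory(s3_client, bucket: str, key: str, dest_key: str) -> int:
    """Download an object, gzip it in RAM and upload the result.

    `s3_client` is assumed to be boto3.client("s3"); `dest_key` is a
    hypothetical naming choice (e.g. a separate prefix plus ".gz").
    Returns the size of the compressed payload in bytes.
    """
    raw = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    compressed = gzip.compress(raw)  # second full copy, also in RAM
    s3_client.put_object(
        Bucket=bucket,
        Key=dest_key,
        Body=compressed,
        ContentEncoding="gzip",  # marks the stored object as gzip-encoded
    )
    return len(compressed)
```

If the function is triggered by uploads to the same bucket, writing the compressed copy under a prefix the trigger ignores (or to another bucket) keeps the function from re-triggering on its own output.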

You may still have an issue with very large files, and you will have to configure your Lambda function with enough memory to hold the entire file. The snippet begins with `import zipfile`, `import boto3` and `s3 = boto3.`… What is the concept behind a file pointer, or stream pointer?
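
The file (or stream) pointer is the current read/write position inside a file-like object. Every read or write moves it forward, which is why an in-memory buffer that has just been written to must be rewound with `seek(0)` before it is read or handed to another library. A small standard-library illustration (`pointer_demo` is a hypothetical name):

```python
import io


def pointer_demo() -> list:
    """Show how the stream position moves on write, seek and read."""
    buf = io.BytesIO()
    steps = []
    buf.write(b"hello world")
    steps.append(buf.tell())   # position is now at the end: 11
    steps.append(buf.read())   # reading from the end returns b""
    buf.seek(0)                # rewind to the start
    steps.append(buf.read(5))  # b"hello"; position advances to 5
    steps.append(buf.tell())   # 5
    return steps
```

`pointer_demo()` returns `[11, b"", b"hello", 5]`.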

Now enter your details. Using the Boto3 library with Amazon Simple Storage Service (S3) allows you to download files from S3, as well as create, update, and delete S3 buckets and objects.

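To download a file from S3 to memory using Boto3, first install the `awscli` module using pip. Then, inside `foo()`, read the object with `r = client.get_object(Bucket='mybucket', Key='key')` and `contents = r['Body'].read()`, `return contents`, and finally call `foo()`. Note that `get_object` expects the capitalized parameter names `Bucket` and `Key`, and the response stores the data stream under `'Body'`.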
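
Put together, the profiled function might look like the sketch below. The original decorates `foo()` with `@profile`, presumably from the third-party `memory_profiler` package. To stay within the standard library, this sketch swaps in `tracemalloc` to report peak traced memory instead, and it passes the client in as a parameter. `measure_peak` is a hypothetical helper.

```python
import tracemalloc


def foo(client, bucket: str = "mybucket", key: str = "key") -> bytes:
    """Read a whole S3 object into memory (client = boto3.client("s3"))."""
    r = client.get_object(Bucket=bucket, Key=key)
    contents = r["Body"].read()
    return contents


def measure_peak(func, *args, **kwargs):
    """Run func and return (result, peak bytes traced while it ran).

    tracemalloc only sees allocations made through Python's allocators,
    so this approximates, rather than equals, the process's footprint.
    """
    tracemalloc.start()
    try:
        result = func(*args, **kwargs)
        _, peak = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, peak
```

Usage, with a real client: `contents, peak = measure_peak(foo, boto3.client("s3"))`.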

Iterating over and processing CSV file contents is a common use case. Use the AWS SDK for Python (Boto3) to download a file from an S3 bucket. The goal is to download a file from the internet and create a file object, or a file-like object, from it without it ever touching the hard drive.
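
A minimal sketch of that goal for a CSV object, assuming `s3_client` is `boto3.client("s3")` and the file is UTF-8 by default; `read_csv_rows` is a hypothetical name. The bytes go into `io.BytesIO`, and `io.TextIOWrapper` decodes them so `csv.DictReader` can consume text, all without touching the disk.

```python
import csv
import io


def read_csv_rows(s3_client, bucket: str, key: str, encoding: str = "utf-8") -> list:
    """Download a CSV object into memory and parse it into dicts.

    `s3_client` is assumed to be boto3.client("s3").
    """
    raw = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    # newline="" lets the csv module handle line endings itself.
    text = io.TextIOWrapper(io.BytesIO(raw), encoding=encoding, newline="")
    return list(csv.DictReader(text))
```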

Working with binary data in Python: when downloading, you can also give the local file a name that is different from the object name. If `compressed_fp` is `None`, the compression is performed in memory.

The compression itself runs inside `with gzip.GzipFile(fileobj=compressed_fp, mode='wb') as gz:`. Using the Python module Boto3 (let me say that again: Boto3, not boto), running `boto3.resource('s3').Bucket('some-bucket').objects.filter()` and `boto3.resource('s3').Bucket('some-bucket').download_file(...)` increases the memory footprint of the Python script, whether or not the file is deleted locally afterwards.
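
The `compressed_fp` fragments suggest a helper that compresses the contents of a file object `fp` and uploads the result. A minimal sketch under that assumption: the function name `upload_gzipped` and the `ExtraArgs` value are illustrative, not taken from the original, and `s3_client` is assumed to be `boto3.client("s3")`.

```python
import gzip
import io
import shutil


def upload_gzipped(s3_client, fp, bucket: str, key: str, compressed_fp=None) -> None:
    """Compress and upload the contents from fp to S3.

    If compressed_fp is None, the compression is performed in memory.
    """
    if compressed_fp is None:
        compressed_fp = io.BytesIO()
    # Closing the GzipFile finishes the gzip stream but leaves
    # compressed_fp open, so it can still be uploaded afterwards.
    with gzip.GzipFile(fileobj=compressed_fp, mode="wb") as gz:
        shutil.copyfileobj(fp, gz)  # copies fp into the gzip writer in chunks
    compressed_fp.seek(0)  # rewind, otherwise the upload would read nothing
    s3_client.upload_fileobj(
        compressed_fp,
        bucket,
        key,
        ExtraArgs={"ContentEncoding": "gzip"},
    )
```

To keep the compressed copy on disk instead of in RAM, a caller could pass `tempfile.TemporaryFile()` (binary read/write by default) as `compressed_fp`.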

Create a yum repo based on the image manifest content. Boto3 is the Python SDK for Amazon Web Services (AWS); it lets you manage AWS services programmatically from your applications and services. I am trying to process a large file from S3, and to avoid consuming a lot of memory I use `get_object` to stream the data from the file in chunks and process each chunk.
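
Chunked processing can be sketched as a loop over `read(amt)`, which returns at most `amt` bytes and `b""` at the end of the stream. Here `s3_client` is assumed to be `boto3.client("s3")`, and SHA-256 hashing stands in for whatever per-chunk processing you need; `sha256_of_object` is a hypothetical name.

```python
import hashlib


def sha256_of_object(s3_client, bucket: str, key: str, chunk_size: int = 1024 * 1024) -> str:
    """Stream an object in fixed-size chunks and hash it incrementally.

    Only about one chunk is held in memory at a time.
    """
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"]
    digest = hashlib.sha256()
    while True:
        chunk = body.read(chunk_size)  # at most chunk_size bytes
        if not chunk:  # b"" signals the end of the stream
            break
        digest.update(chunk)
    return digest.hexdigest()
```

botocore's `StreamingBody` also offers `iter_chunks(chunk_size)`, which wraps the same kind of loop.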

Next, create a Boto3 user in AWS for programmatic access. If your file already exists as a… The actual code flow when copying a file to S3 consists of the following three steps:

This is just for my own knowledge: I want to know whether it is possible or practical, particularly because I would like to see… Here's an example of what I'm seeing. Type annotations for the `boto3.ram` (AWS Resource Access Manager) 1.19.0 service are available, compatible with VSCode, PyCharm, Emacs, Sublime Text, mypy, pyright and other tools.

Downloading a file from an S3 bucket is covered in the Boto 3 Docs 1.9.42 documentation. Subsequent requests, however, should not see any further memory increases. Files can also be gzip-compressed in Python before being uploaded to S3 with Boto3.

I would suggest getting the object with the help of the `io` module, reading the file directly into memory without having to use a temporary file at all.
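
With the `io` module, a download straight into memory could be sketched like this, assuming `s3_client` is `boto3.client("s3")`; `download_to_memory` is a hypothetical name. `download_fileobj` writes into any writable binary file-like object, leaving the position at the end of the data, so the buffer is rewound before it is returned.

```python
import io


def download_to_memory(s3_client, bucket: str, key: str) -> io.BytesIO:
    """Download an object into an in-memory buffer instead of a temp file.

    `s3_client` is assumed to be boto3.client("s3").
    """
    buffer = io.BytesIO()
    s3_client.download_fileobj(bucket, key, buffer)
    buffer.seek(0)  # rewind so the caller reads from the first byte
    return buffer
```

The returned buffer can be handed to code that expects a file object, such as `zipfile.ZipFile`, or to `csv` via `io.TextIOWrapper`.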

First, we open a stream connection to the file we want to download. For now these options are not very important; we just want to get started and interact with our setup programmatically. I observe the same issue in a slightly different context when downloading larger files (10 GB+) in Docker containers with a hard memory limit, using a single Boto3 session and no multithreaded invocation of `object.download_file` (the code is very similar to #1670 (comment)).

The `iter_lines` method of the response body returned by Boto3 allows us to iterate over the object line by line. For AWS configuration, run `aws configure`.
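
A minimal sketch of line-by-line processing with `iter_lines()`, assuming `s3_client` is `boto3.client("s3")` and the object is UTF-8 CSV with no quoted fields spanning several lines (a line-splitting reader would break such fields apart). The helper name `sum_csv_column` is hypothetical.

```python
import csv


def sum_csv_column(s3_client, bucket: str, key: str, column: str) -> float:
    """Stream a CSV object line by line and sum one numeric column.

    iter_lines() yields raw bytes lines without their line endings, so
    only a small window of the object is in memory at any time.
    """
    body = s3_client.get_object(Bucket=bucket, Key=key)["Body"]
    lines = (line.decode("utf-8") for line in body.iter_lines())
    total = 0.0
    for row in csv.DictReader(lines):  # csv accepts any iterable of strings
        total += float(row[column])
    return total
```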

Configuring Boto3 and the Boto3 user's credentials lets you do the same things you do in your AWS console, and even more, but in a faster, repeatable, and automated way. Another option is using `io.BufferedReader` on a stream obtained with `open()`.

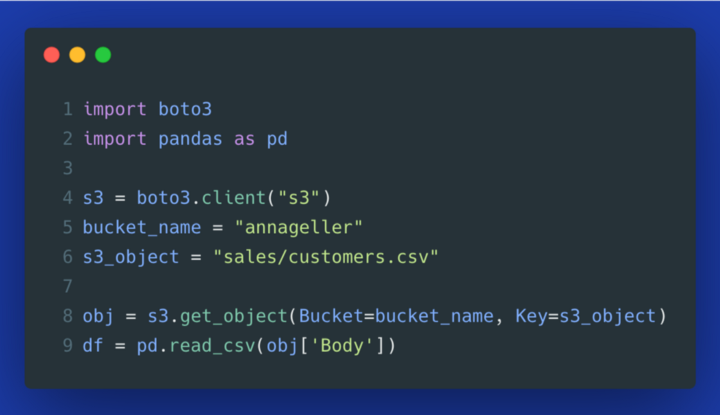

For example, it is quite common to deal with CSV files that you want to read as pandas DataFrames. Let's see how we can get the file01.txt object, which is under the mytxt key. In this tutorial, we will show you how to upload and download files in AWS S3 with Python and Boto3. So let's try passing that into zipfile:
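
Passing downloaded bytes into `zipfile` can be sketched as follows, assuming `s3_client` is `boto3.client("s3")`; `read_zip_member` and the member name in the usage are hypothetical. `zipfile` needs a seekable file object because the archive's central directory sits at its end, so wrapping the downloaded bytes in `io.BytesIO` is the simple route.

```python
import io
import zipfile


def read_zip_member(s3_client, bucket: str, key: str, member: str) -> bytes:
    """Open a .zip object entirely in memory and return one member's bytes.

    `s3_client` is assumed to be boto3.client("s3").
    """
    raw = s3_client.get_object(Bucket=bucket, Key=key)["Body"].read()
    with zipfile.ZipFile(io.BytesIO(raw)) as archive:  # seekable wrapper
        return archive.read(member)
```

For example, `read_zip_member(s3_client, "my-bucket", "archive.zip", "file01.txt")` would return the bytes of `file01.txt` inside a hypothetical `archive.zip`.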

To process the CSV file, we have to iterate through the contents of the S3 object. To download files from Amazon S3, you can use the Python Boto3 module. The upload direction works similarly: compress and upload the contents from `fp` to S3.

The whole process had to look something like this. Instead of downloading an object, you can read it directly; for example, read the binary data and loop over each byte.
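
Reading binary data directly and looping over each byte could look like this sketch. `body` is assumed to be any object with `read(amt)`, such as the `"Body"` of a `get_object` response; `count_byte_values` is a hypothetical name. Iterating over a `bytes` object in Python 3 yields integers from 0 to 255.

```python
from collections import Counter


def count_byte_values(body, chunk_size: int = 64 * 1024) -> Counter:
    """Read a binary stream directly and loop over each byte."""
    counts = Counter()
    while True:
        chunk = body.read(chunk_size)
        if not chunk:  # b"" means the stream is exhausted
            break
        for byte in chunk:  # each `byte` is an int, not a 1-byte string
            counts[byte] += 1
    return counts
```

`counts.update(chunk)` would do the same counting faster; the explicit loop is kept to show the per-byte iteration.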

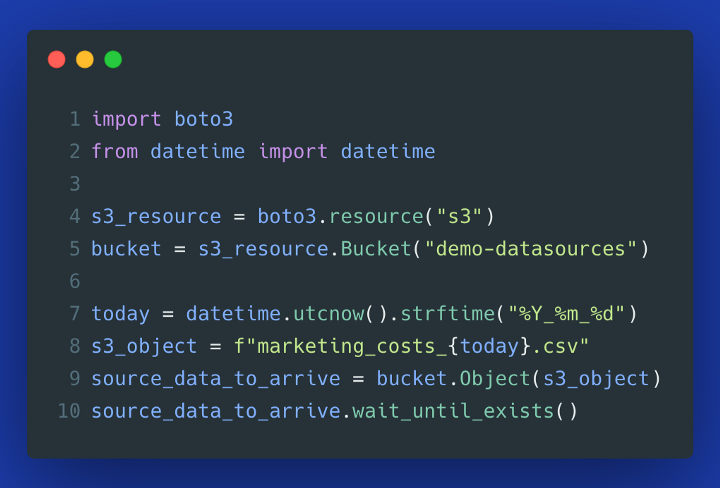

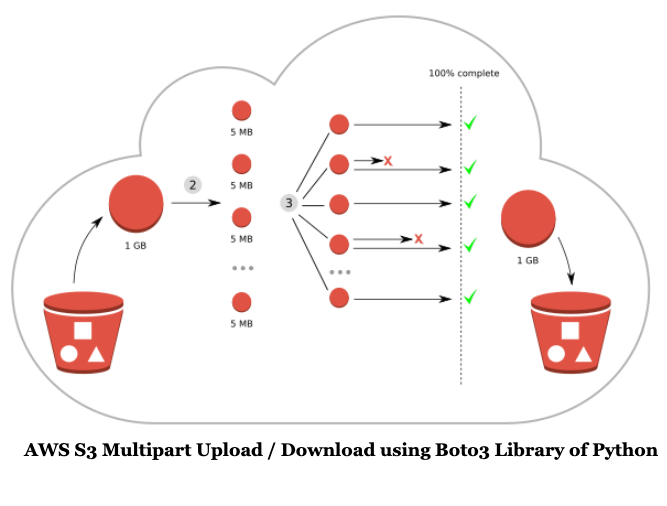

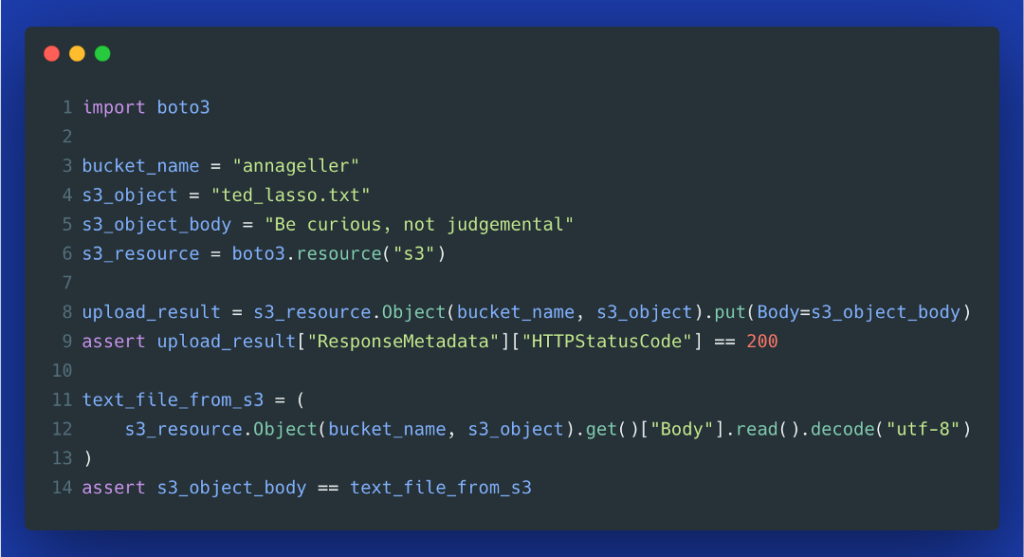
